Neural Nets and Symbolic Reasoning

Second Semester 2012/2013, 6 EC, Block A

Lecturer: PD Dr. Reinhard Blutner

ILLC, University of Amsterdam

Lectures: Monday 9-11 in A1.10; Wednesday 15-17 in B0.201.

Office Hours: by appointment

Science Park 107 (Nikhef), Room F1.40

Outline

Parallel distributed processing is transforming the field of cognitive science. In this course, basic insights of connectionism (neural networks) and classical cognitivism (symbol manipulation) are compared, both from a practical perspective and from the point of view of modern philosophy of mind. Discussing the proper treatment of connectionism, the course debates common misunderstandings, and it claims that the controversy between connectionism and symbolism can be resolved by a unified theory of cognition, one that assigns the proper roles to symbolic computation and numerical neural computation.

(1) Classical cognition, (2) Neural nets and parallel distributed processing, (3) The connectionist-symbolist and emergentist-nativist debates, (4) Connectionism and the mind-body problem, (5) Towards a unifying theory.

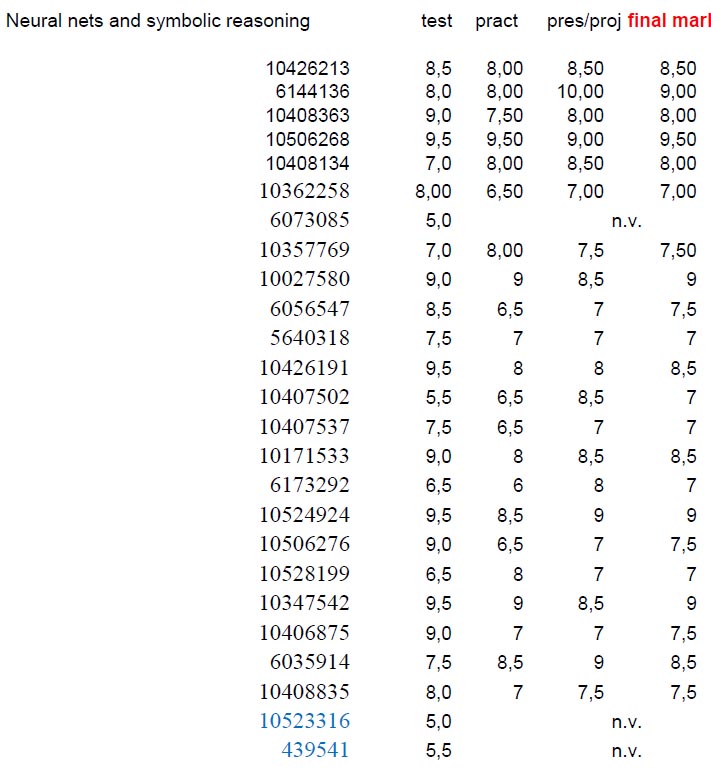

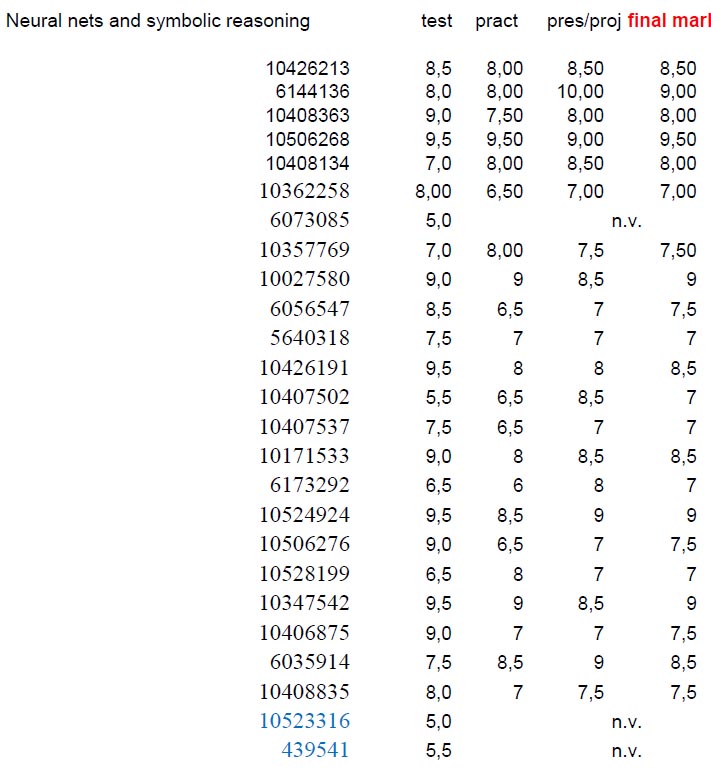

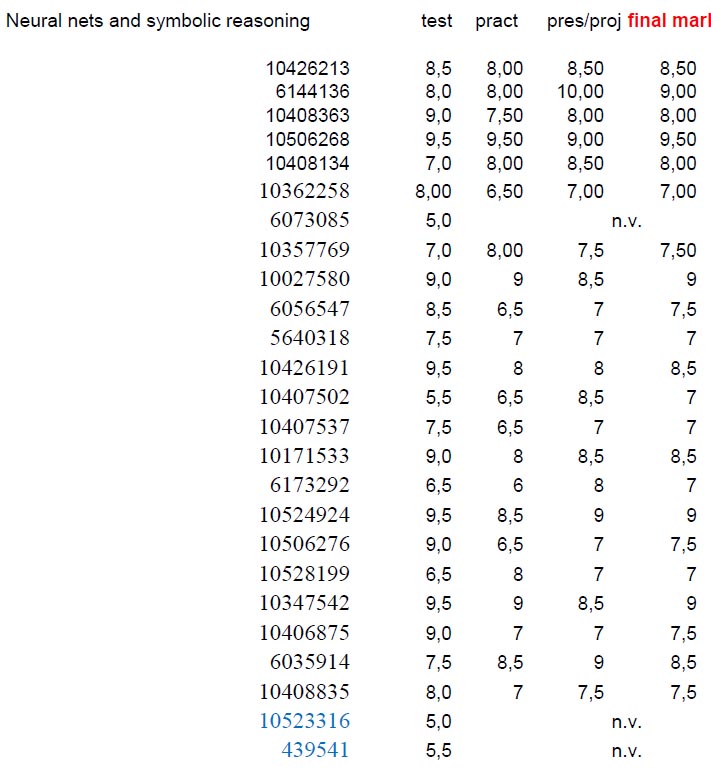

Examinations

This course will be graded based on

- A PowerPoint presentation and/or a term research paper counts for 40% of the course grade. Final deadline for the paper: April 3 (the grade is reduced if work is late: -1 per day!)

- Written test (45-minute exam): counts for 30%.

- Recommended homework: see Exercise. The homework is closely related to the written test.

- Practical exercises: Submit tlearn exercise 8, which counts for 30%. (I check tlearn exercise 8 only, not the other tlearn exercises.) Final deadline for tlearn exercise 8: April 3 (the grade is reduced if work is late: -1 per day!)

Schedule

Part A

Please tell me the topic of your essay/presentation by email before week 6 (March 10)!

Part B

- Week 6a. Opening the Connectionist-Symbolist Debate [slides]

Obligatory readings: Jerry A. Fodor and Zenon W. Pylyshyn. Connectionism and Cognitive Architecture: A Critical Analysis.

J. Elman (1991). Distributed Representations, Simple Recurrent Networks, and Grammatical Structure.

Student presentation: Philip Schulz: Automatic formation of topological maps (Kohonen)

Week 6b. The nature of systematicity [slides].

Invited guest presentation: Gideon Borensztajn: What the systematicity of language reveals about cortical connectivity, and implications for connectionism. Related paper click here.

Gideon's PhD thesis is available here: pdf (6 MB) / pdf with cover page (9 MB).

Student presentation: Norbert Heijne & Anna Keune: Modelling logical inferences: Wason's selection task and connectionism.

- Week 7a. Applying neural networks to problems of language, cognition and artificial intelligence.

Student presentation: Peter Schmidt: Echo state machines.

Student presentation: Marieke Woensdregt: Subsymbolic language processing using a central control network.

Week 7b. Third generation networks: Assemblies, synfire chains, echo/fluid machines.

Student presentation: Patrick de Kok: Neural networks and geometric algebra.

Student presentation: Paris Mavromoustakos Blo: New solutions to the binding problem.

Invited guest presentation by Hartmut Fitz (MPI Nijmegen): A primer to reservoir computing. For preparation you can read the paper available here: http://minds.jacobs-university.de/sites/default/files/uploads/papers/2261_LukoseviciusJaeger09.pdf. Here are the slides.

Obligatory reading: Abeles, Hayon, Lehmann (2004): Modeling Compositionality by Dynamic Binding of Synfire Chains

- Retests, Exam, Consultations: March 25 from 13.30 to 16.00 in B0.201 (Science Park). Please send me an email if you will take the retest or want a consultation.

Possible topics for projects/essays

- What is systematicity?

Tim van Gelder and Lars Niklasson: Classicalism and Cognitive Architecture

Blutner, Hendriks, de Hoop, Schwartz: When Compositionality Fails to Predict Systematicity

- Modelling conceptual combination

Edward E. Smith, Daniel N. Osherson, Lance J. Rips, and Margaret Keane: Combining Prototypes: A Selective Modification Model

If prototype theory is to be extended to composite concepts, principles of conceptual composition must be supplied. This is the concern of the present paper. In particular, we will focus on adjective-noun conjunctions such as striped apple and not very red fruit, and specify how prototypes for such conjunctions can be composed from prototypes for their constituents. While the specifics of our claims apply to only adjective-noun compounds, some of the broader principles we espouse may also characterize noun-noun compounds such as dog house.

Barry Devereux & Fintan Costello: Modelling the Interpretation and Interpretation Ease of Noun-Noun Compounds Using a Relation Space Approach to Compound Meaning

How do people interpret noun-noun compounds such as gas tank or penguin movie? In this paper, we present a computational model of conceptual combination. Our model of conceptual combination introduces a new method of representing the meaning of compounds: the relations used to interpret compounds are represented as points or vectors in a high-dimensional relation space. Such a representational framework has many advantages over other approaches. Firstly, the high dimensionality of the space provides a detailed description of the compound’s meaning; each of the space’s dimensions represents a semantically distinct way in which compound meanings can differ from each other. Secondly, representation of compound meanings in a space allows us to use a distance metric to measure how similar or different pairs of compound meanings are to each other. We conducted a corpus study, generating vectors in this relation space representing the meanings of a large, representative set of familiar compounds. A computational model of compound interpretation that uses these vectors as a database from which to derive new relation vectors for new compounds is presented.

- Relating and unifying connectionist networks and propositional logic

Gadi Pinkas (1995). Reasoning, connectionist nonmonotonicity and learning in networks that capture propositional knowledge. [1.6 MB!]

Reinhard Blutner (2005): Neural Networks, Penalty Logic and Optimality Theory

- Symbolic knowledge extraction from trained neural networks

A.S. d’Avila Garcez, K. Broda, & D.M. Gabbay (2001). Symbolic knowledge extraction from trained neural networks: A sound approach.

Although neural networks have shown very good performance in many application domains, one of their main drawbacks lies in the incapacity to provide an explanation for the underlying reasoning mechanisms. The “explanation capability” of neural networks can be achieved by the extraction of symbolic knowledge. In this paper, we present a new method of extraction that captures nonmonotonic rules encoded in the network, and prove that such a method is sound.

- Natural deduction in connectionist systems

William Bechtel (1994): Natural Deduction in Connectionist Systems

The relation between logic and thought has long been controversial, but has recently influenced theorizing about the nature of mental processes in cognitive science. One prominent tradition argues that to explain the systematicity of thought we must posit syntactically structured representations inside the cognitive system which can be operated upon by structure sensitive rules similar to those employed in systems of natural deduction. I have argued elsewhere that the systematicity of human thought might better be explained as resulting from the fact that we have learned natural languages which are themselves syntactically structured. According to this view, symbols of natural language are external to the cognitive processing system and what the cognitive system must learn to do is produce and comprehend such symbols. In this paper I pursue that idea by arguing that ability in natural deduction itself may rely on pattern recognition abilities that enable us to operate on external symbols rather than encodings of rules that might be applied to internal representations. To support this suggestion, I present a series of experiments with connectionist networks that have been trained to construct simple natural deductions in sentential logic. These networks not only succeed in reconstructing the derivations on which they have been trained, but in constructing new derivations that are only similar to the ones on which they have been trained.

- Modelling logical inferences: Wason's selection task and connectionism

Steve J. Hanson, Jacqueline P. Leighton, & Michael R.W. Dawson: A parallel distributed processing model of Wason’s selection task

Three parallel distributed processing (PDP) networks were trained to generate the ‘p’, the ‘p and not-q’ and the ‘p and q’ responses, respectively, to the conditional rule used in Wason’s selection task. Afterward, each trained network was analyzed for the algorithm it developed to learn the desired response to the task. Analyses of each network’s solution to the task suggested a ‘specialized’ algorithm that focused on card location. For example, if the desired response to the task was found at card 1, then a specific set of hidden units detected the response. In addition, we did not find support that selecting the ‘p’ and ‘q’ response is less difficult than selecting the ‘p’ and ‘not-q’ response. Human studies of the selection task usually find that participants fail to generate the latter response, whereas most easily generate the former. We discuss how our findings can be used to (a) extend our understanding of selection task performance, (b) understand existing algorithmic theories of selection task performance, and (c) generate new avenues of study of the selection task.

- Infinite RAAM: A principled connectionist substrate for cognitive modelling

Simon Levy and Jordan Pollack (2001): Infinite RAAM

Unification-based approaches have come to play an important role in both theoretical and applied modeling of cognitive processes, most notably natural language. Attempts to model such processes using neural networks have met with some success, but have faced serious hurdles caused by the limitations of standard connectionist coding schemes. As a contribution to this effort, this paper presents recent work in Infinite RAAM (IRAAM), a new connectionist unification model. Based on a fusion of recurrent neural networks with fractal geometry, IRAAM allows us to understand the behavior of these networks as dynamical systems. Using a logical programming language as our modeling domain, we show how this dynamical-systems approach solves many of the problems faced by earlier connectionist models, supporting unification over arbitrarily large sets of recursive expressions. We conclude that IRAAM can provide a principled connectionist substrate for unification in a variety of cognitive modeling domains.

- Encoding nested relational structures in fixed width vector representations

Tony A. Plate (2000): Analogy retrieval and processing with distributed vector representations

Holographic Reduced Representations (HRRs) are a method for encoding nested relational structures in fixed width vector representations. HRRs encode relational structures as vector representations in such a way that the superficial similarity of the vectors reflects both superficial and structural similarity of the relational structures. HRRs also support a number of operations that could be very useful in psychological models of human analogy processing: fast estimation of superficial and structural similarity via a vector dot-product; finding corresponding objects in two structures; and chunking of vector representations. Although similarity assessment and discovery of corresponding objects both theoretically take exponential time to perform fully and accurately, with HRRs one can obtain approximate solutions in constant time. The accuracy of these operations with HRRs mirrors patterns of human performance on analog retrieval and processing tasks.
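For readers considering this topic, the following is a minimal sketch of how an HRR-style encoding can work. It is not taken from Plate's paper. It assumes the commonly described HRR scheme of binding role and filler vectors by circular convolution, unbinding with the involution (approximate inverse), and comparing vectors by a normalized dot product. The vector size, the role and filler names (`role_agent`, `dog`, and so on) and all function names are illustrative choices.

```python
import math
import random


def random_vector(n, rng):
    """Draw an n-dimensional vector with i.i.d. N(0, 1/n) elements."""
    return [rng.gauss(0.0, 1.0 / math.sqrt(n)) for _ in range(n)]


def circular_convolution(a, b):
    """Bind two vectors: c[k] = sum_j a[j] * b[(k - j) mod n]."""
    n = len(a)
    return [sum(a[j] * b[(k - j) % n] for j in range(n)) for k in range(n)]


def involution(a):
    """Approximate inverse used for unbinding: a*[k] = a[(-k) mod n]."""
    n = len(a)
    return [a[(-k) % n] for k in range(n)]


def add(a, b):
    """Superimpose two vectors element by element."""
    return [x + y for x, y in zip(a, b)]


def cosine(a, b):
    """Normalized dot product, used here as the similarity measure."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


rng = random.Random(0)
n = 512  # illustrative vector width
role_agent, role_object = random_vector(n, rng), random_vector(n, rng)
dog, cat = random_vector(n, rng), random_vector(n, rng)

# Encode the structure "agent = dog, object = cat" in one fixed-width vector.
trace = add(circular_convolution(role_agent, dog),
            circular_convolution(role_object, cat))

# Ask "who is the agent?": unbind with the involution of the role vector.
decoded = circular_convolution(involution(role_agent), trace)
sim_dog = cosine(decoded, dog)
sim_cat = cosine(decoded, cat)
print("similarity to dog:", sim_dog)
print("similarity to cat:", sim_cat)
```

The decoded vector is only a noisy copy of the filler, so in practice it would be compared against all known item vectors and the most similar one selected.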

- New solutions to the binding problem

Abeles, Hayon, Lehmann (2004): Modeling Compositionality by Dynamic Binding of Synfire Chains

This paper examines the feasibility of manifesting compositionality by a system of synfire chains. Compositionality is the ability to construct mental representations, hierarchically, in terms of parts and their relations. We show that synfire chains may synchronize their waves when a few orderly cross links are available. We propose that synchronization among synfire chains can be used for binding components into a whole. Such synchronization is shown both for detailed simulations, and by numerical analysis of the propagation of a wave along a synfire chain. We show that global inhibition may prevent spurious synchronization among synfire chains. We further show that selecting which synfire chains may synchronize to which others may be improved by including inhibitory neurons in the synfire pools. Finally we show that in a hierarchical system of synfire chains, a part-binding problem may be resolved, and that such a system readily demonstrates the property of priming. We compare the properties of our system with the general requirements for neural networks that demonstrate compositionality.

See also: van der Velde (2005): Neural blackboard architectures

- The role of symbolic grounding within embodied cognition / Grounding symbols with neural nets

Michael L. Anderson: Embodied Cognition: A field guide

Stevan Harnad: Grounding symbols in the analog world with neural nets -- A hybrid model (Target Article on Symbolism-Connectionism)

Bruce J. MacLennan: Commentary on Harnad on Symbolism-Connectionism

Stevan Harnad: Symbol Grounding and the Symbolic Theft Hypothesis

- Subsymbolic language processing using a central control network

Risto Miikkulainen: Subsymbolic case-role analysis of sentences with embedded clauses

A distributed neural network model called SPEC for processing sentences with recursive relative clauses is described. The model is based on separating the tasks of segmenting the input word sequence into clauses, forming the case-role representations, and keeping track of the recursive embeddings into different modules. The system needs to be trained only with the basic sentence constructs, and it generalizes not only to new instances of familiar relative clause structures, but to novel structures as well. SPEC exhibits plausible memory degradation as the depth of the center embeddings increases, its memory is primed by earlier constituents, and its performance is aided by semantic constraints between the constituents. The ability to process structure is largely due to a central executive network that monitors and controls the execution of the entire system. This way, in contrast to earlier subsymbolic systems, parsing is modelled as a controlled high-level process rather than one based on automatic reflex responses.

- Implicit learning

Bert Timmermans & Axel Cleeremans: Rules vs. Statistics in Implicit Learning of Biconditional Grammars

A significant part of everyday learning occurs incidentally, a process typically described as implicit learning. A central issue in this domain and others, such as language acquisition, is the extent to which performance depends on the acquisition and deployment of abstract rules. Shanks and colleagues [22], [11] have suggested (1) that discrimination between grammatical and ungrammatical instances of a biconditional grammar requires the acquisition and use of abstract rules, and (2) that training conditions, in particular whether instructions orient participants to identify the relevant rules or not, strongly influence the extent to which such rules will be learned. In this paper, we show (1) that a Simple Recurrent Network can in fact, under some conditions, learn a biconditional grammar, (2) that training conditions indeed influence learning in simple auto-associator networks and (3) that such networks can likewise learn about biconditional grammars, albeit to a lesser extent than human participants. These findings suggest that mastering biconditional grammars does not require the acquisition of abstract rules to the extent implied by Shanks and colleagues, and that performance on such material may in fact be based, at least in part, on simple associative learning mechanisms.

- Echo state machines

Herbert Jaeger: Discovering multiscale dynamical features with hierarchical Echo State Networks

Many time series of practical relevance have multi-scale characteristics. Prime examples are speech, texts, writing, or gestures. If one wishes to learn models of such systems, the models must be capable of representing dynamical features on different temporal and/or spatial scales. One natural approach to this end is hierarchical models, where higher processing layers are responsible for processing longer-range (slower, coarser) dynamical features of the input signal. This report introduces a hierarchical architecture where the core ingredient of each layer is an echo state network. In a bottom-up flow of information, throughout the architecture increasingly coarse features are extracted from the input signal. In a top-down flow of information, feature expectations are passed down. The architecture as a whole is trained on a one-step input prediction task by stochastic error gradient descent. The report presents a formal specification of these hierarchical systems and illustrates important aspects of their functioning in a case study with synthetic data.
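As background for this topic, here is a minimal sketch of the state update of a single echo state network layer. It is not Jaeger's hierarchical architecture. It assumes the usual reservoir update x(t+1) = tanh(W x(t) + W_in u(t)) with fixed random weights, and it omits the trained readout entirely. The reservoir matrix is scaled so that its largest absolute row sum is 0.9, a simple sufficient condition for a fading memory that stands in for the more common spectral-radius scaling. All names and sizes are illustrative.

```python
import math
import random


def make_reservoir(size, rng, norm=0.9):
    """Random recurrent weights, rescaled so the largest absolute row sum equals norm."""
    raw = [[rng.uniform(-1.0, 1.0) for _ in range(size)] for _ in range(size)]
    max_row_sum = max(sum(abs(v) for v in row) for row in raw)
    return [[norm * v / max_row_sum for v in row] for row in raw]


def step(state, u, w, w_in):
    """One reservoir update: x(t+1) = tanh(W x(t) + W_in u(t))."""
    return [
        math.tanh(sum(w_ij * x_j for w_ij, x_j in zip(row, state)) + w_in_i * u)
        for row, w_in_i in zip(w, w_in)
    ]


def run(state, inputs, w, w_in):
    """Drive the reservoir with a scalar input sequence and return the final state."""
    for u in inputs:
        state = step(state, u, w, w_in)
    return state


rng = random.Random(1)
size = 20  # illustrative reservoir size
w = make_reservoir(size, rng)
w_in = [rng.uniform(-0.5, 0.5) for _ in range(size)]
inputs = [math.sin(0.2 * t) for t in range(100)]

# Two different starting states, driven by the same input signal.
state_a = run([rng.uniform(-1, 1) for _ in range(size)], inputs, w, w_in)
state_b = run([rng.uniform(-1, 1) for _ in range(size)], inputs, w, w_in)
gap = max(abs(a - b) for a, b in zip(state_a, state_b))
print("largest state difference after 100 steps:", gap)
```

With this scaling each update shrinks the largest difference between the two states by at least a factor of 0.9, so the reservoir state ends up depending on the input history rather than on where it started. This is the "echo" the name refers to.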

- Conceptual spaces and compositionality

Peter Gärdenfors & Massimo Warglien: Semantics, conceptual spaces and the meeting of minds

We present an account of semantics that is not construed as a mapping of language to the world, but as a mapping between individual meaning spaces. The meanings of linguistic entities are established via a “meeting of minds.” The concepts in the minds of communicating individuals are modeled as convex regions in conceptual spaces. We outline a mathematical framework based on fixpoints in continuous mappings between conceptual spaces that can be used to model such a semantics. If concepts are convex, it will in general be possible for the interactors to agree on a joint meaning even if they start out from different representational spaces. Furthermore, we show by some examples that the approach helps explain the semantic processes involved in the composition of expressions.

- Automatic formation of topological maps

Teuvo Kohonen: Self-organized formation of topologically correct feature maps.

This work contains a theoretical study and computer simulations of a new self-organizing process. The principal discovery is that in a simple network of adaptive physical elements which receives signals from a primary event space, the signal representations are automatically mapped onto a set of output responses in such a way that the responses acquire the same topological order as that of the primary events. In other words, a principle has been discovered which facilitates the automatic formation of topologically correct maps of features of observable events. The basic self-organizing system is a one- or two-dimensional array of processing units resembling a network of threshold-logic units, and characterized by short-range lateral feedback between neighbouring units. Several types of computer simulations are used to demonstrate the ordering process as well as the conditions under which it fails.
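To make the ordering process more concrete, here is a minimal sketch of a one-dimensional self-organizing map trained on scalar inputs drawn uniformly from [0, 1]. It is not Kohonen's original lateral-feedback model. It uses the simplified winner-plus-neighbourhood update usually taught as the SOM algorithm, with a Gaussian neighbourhood whose radius shrinks over time. The function names, the learning-rate schedule and all parameter values are illustrative assumptions.

```python
import math
import random


def train_som(num_units=10, steps=2000, seed=0):
    """Train a 1-D chain of units on uniform random scalars; return the unit weights."""
    rng = random.Random(seed)
    weights = [rng.random() for _ in range(num_units)]
    for t in range(steps):
        x = rng.random()                       # one input from the event space
        frac = t / steps
        rate = 0.5 * (1.0 - frac) + 0.01       # learning rate decays over time
        radius = (num_units / 2.0) * (1.0 - frac) + 0.5  # neighbourhood shrinks
        # The winner is the unit whose weight is closest to the input.
        winner = min(range(num_units), key=lambda i: abs(weights[i] - x))
        for i in range(num_units):
            # Units near the winner on the chain are pulled along with it.
            h = math.exp(-((i - winner) ** 2) / (2.0 * radius ** 2))
            weights[i] += rate * h * (x - weights[i])
    return weights


def is_ordered(values):
    """True if the values increase or decrease monotonically along the chain."""
    pairs = list(zip(values, values[1:]))
    return all(a <= b for a, b in pairs) or all(a >= b for a, b in pairs)


weights = train_som()
print("unit weights:", [round(w, 3) for w in weights])
print("topologically ordered:", is_ordered(weights))
```

If training succeeds, neighbouring units on the chain respond to neighbouring input values, which is the topological order the abstract refers to. The `is_ordered` check reports whether that happened for a given run.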

- Neural networks for simulating statistical models of language

Yoshua Bengio, Réjean Ducharme, Pascal Vincent, and Christian Jauvin: A Neural Probabilistic Language Model

Holger Schwenk, Daniel Déchelotte and Jean-Luc Gauvain: Continuous Space Language Models for Statistical Machine Translation

Learning Pattern Recognition Through Quasi-Synchronization of Phase Oscillators

Patrick Suppes et al.

The idea that synchronized oscillations are important in cognitive tasks is receiving significant attention. In this view, single neurons are no longer elementary computational units. Rather, coherent oscillating groups of neurons are seen as nodes of networks performing cognitive tasks. From this assumption, we develop a model of stimulus-pattern learning and recognition. The three most salient features of our model are: 1) a new definition of synchronization; 2) demonstrated robustness in the presence of noise; and 3) pattern learning.

- Analogical inference, schema induction and relational reasoning

John E. Hummel & Keith J. Holyoak: Relational Reasoning in a Neurally-plausible Cognitive Architecture: An Overview of the LISA Project.

Human mental representations are both flexible and structure-sensitive, properties that jointly present challenging design requirements for a model of the cognitive architecture. LISA satisfies these requirements by representing relational roles and their fillers as patterns of activation distributed over a collection of semantic units (achieving flexibility) and binding these representations dynamically into propositional structures using synchrony of firing (achieving structure-sensitivity). The resulting representations serve as a natural basis for memory retrieval, analogical mapping, analogical inference and schema induction. In addition, the LISA architecture provides an integrated account of effortless “reflexive” forms of inference and more effortful “reflective” inference, serves as a natural basis for integrating generalized procedures for relational reasoning with modules for more specialized forms of reasoning (e.g., reasoning about objects in spatial arrays), provides an a priori account of the limitations of human working memory, and provides a natural platform for simulating the effects of various kinds of brain damage.

Books used to prepare the lecture

- William Bechtel (2002). Connectionism and the Mind. Oxford, Blackwell Publishers.

- Gary F. Marcus (2001). The Algebraic Mind: Integrating Connectionism and Cognitive Science. The MIT Press.

- Kim Plunkett & Jeffrey L. Elman (1997). Exercises in Rethinking Innateness: A Handbook for Connectionist Simulations. The MIT Press.

- Andy Clark (1989). Microcognition: Philosophy, cognitive science, and parallel distributed processing. The MIT Press.

- Paul Smolensky and Geraldine Legendre (2006). The Harmonic Mind: From neural computation to Optimality Theoretic Grammars. Cambridge, Blackwell.

Practical Instructions: Tlearn

Tips for the installation of T-learn for Windows XP (thanks go to Dewi!)

1. Go to Properties on the menu. A dialog box opens; click on the last tab. There you can set T-learn to run in compatibility mode for a previous version of Windows (98 will work), which prevents it from crashing!

2. When a new project is created, or an existing one is opened, the program crashes or closes unexpectedly if the path to the file is longer than approx. 50 characters and/or contains spaces, making it impossible to use. Please also note that this is very likely to be the case if the user runs Windows 2000 or XP and the project files are on the desktop or in the "My Documents" folder (the absolute path would be similar to "C:\Documents and Settings\YourName\My Documents"). An easy solution to the problem is to only open/create project files for which the path is relatively short and contains no spaces (e.g. c:\tlearn). I would recommend running the program (tlearn.exe) from a similar path as well.
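The path rule in tip 2 can be checked before opening a project. The following is a small sketch and not part of tlearn. The helper name `tlearn_path_problems` is hypothetical, and the limit of 50 characters is taken from the approximate figure above, so treat it as a rough threshold rather than an exact one.

```python
def tlearn_path_problems(path, max_length=50):
    """Return a list of reasons why a project path may make tlearn crash.

    max_length is an assumption based on the approximate limit quoted in the tip.
    """
    problems = []
    if len(path) > max_length:
        problems.append("path is longer than %d characters" % max_length)
    if " " in path:
        problems.append("path contains spaces")
    return problems


for candidate in (r"c:\tlearn", r"C:\Documents and Settings\YourName\My Documents"):
    issues = tlearn_path_problems(candidate)
    print(candidate, "->", issues if issues else "looks safe")
```

An empty list means the path satisfies both conditions named in the tip. It does not guarantee that tlearn will run without problems.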

Tips for setting the parameters (thanks go to Ben!)

tlearn.exe automatically sets the 'log error every' parameter to 100. The consequence of this is that the errors you will see are still relatively high and a 100 hidden node network hardly improves. It is best to set this parameter to 1. The parameter is found under training options, then "more".

Related Websites